When does a self-driving car slow down at the greengrocer's?

Do you know the difference between a greengrocer and an image analysis algorithm? One measures a melon in pounds, the other in pixels. And what do they have in common? Both like the fruit to be as red as possible on the inside. The joke isn't serious, of course, but the topic is: let's see how the melon got into the picture!


Self-driving cars and image and facial recognition systems are perhaps the most impressive technological achievements of the 2010s. Over the past decade, a steady stream of articles, TV reports and blog posts has covered them, reaching not only IT professionals but also the general public, so most people have at least a rough idea of what these systems are used for. Far fewer know how they actually work: how a machine can "catch" what it sees in live video or in the photos it is shown.

In this post, we explain some basic principles of image processing and how algorithms find an object in a vast array of pixels. For the examples we used OpenCV (Open Source Computer Vision), a freely available open-source library. After clarifying the basic concepts, we introduce two procedures that help a computer find objects, whether the object comes from the same image or from a photo taken from a completely different source.

Let's start with the basics!

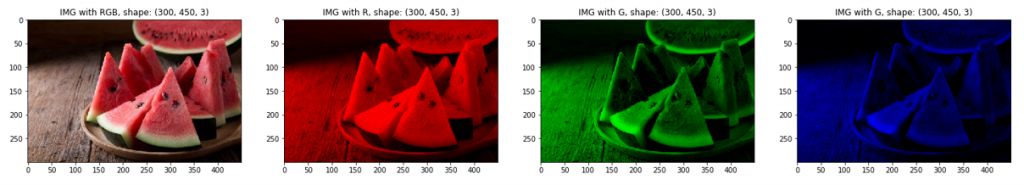

An image is a set of pixels arranged in a matrix that together form visually recognizable, interpretable shapes. The size of the matrix is determined by the width and height of the image, and the number of its layers by the color model. The most common color models include RGB, HSV, HLS, LUV and YIQ. In what follows, we break images down using RGB, that is, the red, green and blue color system. In RGB, the image matrix has three dimensions: height, width and the number of color layers, which is three in this system. The elements of each layer are integers from 0 to 255. In a black-and-white (grayscale) image, 0 represents completely black and 255 represents white; the RGB layers work the same way: in the red layer, for example, 0 means no red (black) and larger values mean stronger red.
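A minimal sketch of this matrix view, assuming NumPy (the array library OpenCV's Python bindings use for images): a color image is a (height, width, 3) array of 8-bit unsigned integers, and a grayscale image is a (height, width) array. The pixel values below are made up for illustration. One caveat for real photos: OpenCV's `cv2.imread` returns the same kind of array, but with the layers in BGR rather than RGB order.

```python
import numpy as np

# A tiny synthetic RGB image: shape is (height, width, layers).
# Each element is an 8-bit unsigned integer between 0 and 255.
image = np.zeros((2, 3, 3), dtype=np.uint8)  # 2 rows, 3 columns, all black
image[0, 0] = (255, 0, 0)       # top-left pixel: pure red
image[1, 2] = (255, 255, 255)   # bottom-right pixel: white

height, width, layers = image.shape
print(height, width, layers)    # 2 3 3

# A grayscale image has a single layer, so its shape is just (height, width).
gray = np.array([[0, 128, 255]], dtype=np.uint8)  # black, mid-gray, white
print(gray.shape)               # (1, 3)
```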

The complex image recognition systems behind self-driving cars can identify landmarks, vehicles, pedestrians, traffic signs and road markings, and can recognize the state of traffic lights. We, however, will start from the basics and illustrate how such a system works using the melon photo.

As the sample images (the melon slices) show, the first image is the original, containing all color layers, followed by versions highlighting the red, green and blue hues. The dimensions of the image have not changed; in the red version, however, the elements of the other two layers were set to zero (completely black), so that only the remaining red layer is displayed. The green and blue versions are produced the same way.
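The channel highlighting can be sketched in a few lines of NumPy, assuming the image is already an RGB array (after `cv2.imread` the layer order would be BGR, so red would be index 2). `isolate_channel` is a hypothetical helper name, not an OpenCV function.

```python
import numpy as np

def isolate_channel(rgb: np.ndarray, index: int) -> np.ndarray:
    """Keep one color layer of an RGB image and set the other two to zero.

    index: 0 = red, 1 = green, 2 = blue (RGB order assumed).
    The result has the same shape as the input, so it can be displayed as-is.
    """
    out = np.zeros_like(rgb)             # same shape and dtype, all black
    out[..., index] = rgb[..., index]    # copy back only the chosen layer
    return out

# A 1x2 image: one orange pixel and one teal pixel.
img = np.array([[[255, 128, 0], [0, 128, 128]]], dtype=np.uint8)
red_only = isolate_channel(img, 0)
print(red_only.tolist())  # [[[255, 0, 0], [0, 0, 0]]]
```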

In this article, I describe two object search procedures: template matching and feature matching.

Template matching, or object matching

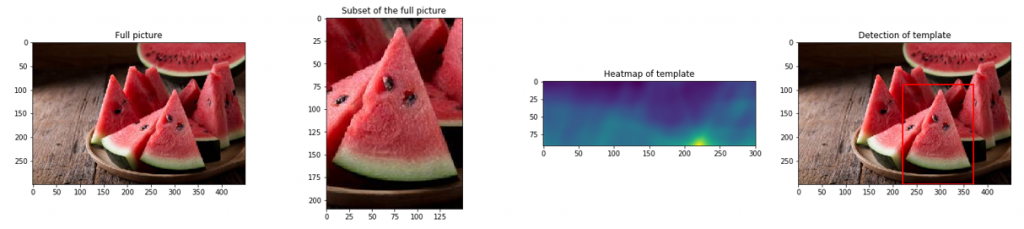

Template matching is one of the simplest object search procedures: the shape we are looking for is a small detail cut from the image itself, so the small image is literally part of the large one.

In this case, it is enough to compare the pixels of the small and the large image. Several operations can do this; the best known are the following:

- correlation between pixels or groups of pixels of the two images (a metric describing a linear relationship, with a value between -1 and 1: 1 indicates a strong relationship in the same direction, -1 a strong relationship in the opposite direction, and 0 the absence of a relationship);

- the difference between groups of pixels of the two images: where the error is 0, the match is perfect.
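Both measures can be written out for a single pair of equally sized patches. This NumPy sketch uses Pearson correlation and the sum of squared differences as concrete examples of the two families; the helper names and the 2x2 patch are illustrative assumptions. Template matching applies such a measure at every position of the small image inside the large one.

```python
import numpy as np

def correlation(a: np.ndarray, b: np.ndarray) -> float:
    """Pearson correlation of two equally sized pixel patches, in -1..1."""
    a = a.astype(np.float64).ravel()
    b = b.astype(np.float64).ravel()
    a -= a.mean()
    b -= b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    # A perfectly flat patch has no variation, so correlation is undefined;
    # returning 0.0 ("no relationship") is a convention chosen here.
    return float((a * b).sum() / denom) if denom else 0.0

def sum_squared_diff(a: np.ndarray, b: np.ndarray) -> float:
    """Sum of squared pixel differences; 0 means a perfect match."""
    d = a.astype(np.float64) - b.astype(np.float64)
    return float((d * d).sum())

patch = np.array([[10, 20], [30, 40]], dtype=np.uint8)
print(correlation(patch, patch))       # 1.0  (same direction)
print(correlation(patch, 50 - patch))  # -1.0 (opposite direction)
print(sum_squared_diff(patch, patch))  # 0.0  (perfect match)
```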

The next set of images shows the steps of the process. The first image is the whole picture, and the second is the detail cut from it that we want to find. The third is the correlation map, computed for every position of the detail within the whole picture: blue marks positions where the pixels do not match, while yellow marks the position of the complete match. The last image shows the result, with a red frame indicating the position of the small image within the whole picture.

This simpler method can be sufficient in several situations. For example, if we know that certain shapes in a set of images always stay the same and changes occur next to them, the new content can be located and processed relative to the positions of those constant shapes. Conversely, it can serve as an indicator: if we know that a change can only occur in one specific region of an image, failing to find the original pattern there means that the change has occurred.

Feature matching, or property matching

Template matching required no image preparation when searching for similar pixels. However, if the image we are looking for is not a part of the original but comes from a completely different source, we first need to highlight its distinctive properties, because these help the algorithms find similar units in the images. Such preparation may include:

- Grayscaling, which reduces the image to gradations of brightness: the result contains only black, white and shades of gray, and has a single layer instead of the three layers of the RGB system.

- Blurring (smoothing), which removes noise: each pixel is replaced by an average of its neighborhood, taken over a small, predefined matrix (kernel) of a few pixels, so that only the most significant brightness changes in the image remain prominent. Note that the image loses sharpness as a result, so the strength of this step has to be chosen with that trade-off in mind.

Feature matching is a set of algorithms that can be combined to find similar units in two different but similar images. The following algorithms help with this (the list is not exhaustive):

- Edge detection: this is of fundamental importance, because images can be highly detailed; the algorithm reduces this detail and simplifies further processing by revealing the edges that matter to us.

- Contour detection: helps determine the shape and extent of individual objects, separating them from other objects.

Feature matching works in two steps. First, the tools listed above are used to reveal the key parts of both images, such as edges and contours. Then the key elements found in the two images are compared and every match is recorded. Because many key elements are examined in both images, some matches will be better than others, so it is advisable to keep only the best matches at the end of the process.

In the images shown above, the grayscaling and pixel averaging (blurring) described earlier were applied. The matching can be considered successful: it found matches for both the flesh and the rind, even though the two images had completely different parameters and were taken under different conditions.

Self-driving cars, of course, use far more complex and sophisticated systems, but their foundations are built from processes like these. With them, a car can detect lanes, classify the signs it recognizes and perform many other basic functions needed for safe driving. Maybe one day your car will stop at the roadside melon vendor when the driver fancies a honey-sweet watermelon, because we already know that the technology can recognize melons.